This article introduces generative AI models and Large Language Models (LLMs), and points to tools that can help your organization build its own LLM.

Introduction to GenAI and LLM

Generative AI refers to a subset of artificial intelligence focused on creating models that can generate new content, such as images, text, or music, based on patterns learned from existing data. It involves utilizing techniques like deep learning and probabilistic modeling to generate outputs that resemble the training data. Generative AI models are capable of producing new and unique content beyond replication.
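
To make "generating new content from patterns learned in existing data" concrete, here is a minimal sketch of a very simple probabilistic generator: a bigram (Markov chain) model written in plain Python. It is far simpler than the deep learning models discussed in this article and is only meant to illustrate the principle. The function names and the toy corpus are assumptions made up for this example.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record, for each word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return followers


def generate(followers, start_word, max_words=10, seed=0):
    """Sample a word sequence by repeatedly picking a random observed follower."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    output = [start_word]
    # Stop at the length limit or when the last word was never followed by anything
    while len(output) < max_words and followers.get(output[-1]):
        output.append(rng.choice(followers[output[-1]]))
    return " ".join(output)


# Toy training data (made up for illustration)
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram_model(corpus)
sample = generate(model, "the", max_words=8)
print(sample)
```

Every pair of adjacent words in the output was seen in the training text, yet the sequence as a whole may be one that never appeared there, which is the sense in which such a model goes beyond replication.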

Large Language Models (LLMs) are a specific type of generative AI model that specializes in natural language processing. They are trained on vast amounts of text data and can generate coherent, contextually relevant, human-like text from a given prompt or input. LLMs such as OpenAI's GPT-3 can understand and generate text on a wide range of topics and in many writing styles.
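
Generating text from a prompt is commonly done one token at a time: the model scores each candidate continuation, the scores are turned into probabilities, and one candidate is sampled. The sketch below shows only that last sampling step, in plain Python. The candidate words and their scores are hypothetical numbers, not the output of any real model, and the `temperature` parameter is a common sampling knob assumed here for illustration.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(candidates, scores, temperature=1.0, seed=0):
    """Draw one candidate token according to its softmax probability."""
    probabilities = softmax(scores, temperature)
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return rng.choices(candidates, weights=probabilities, k=1)[0]


# Hypothetical scores a model might assign to continuations of "The sky is"
candidates = ["blue", "cloudy", "falling"]
scores = [3.0, 1.5, 0.2]

probabilities = softmax(scores)
print(dict(zip(candidates, (round(p, 3) for p in probabilities))))
print(sample_next_token(candidates, scores, temperature=0.7))
```

A lower temperature concentrates probability on the highest-scoring candidate, while a higher one makes less likely continuations more common.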

Machine Learning and Data Science Platforms

Building AI solutions requires a Machine Learning (ML) platform and an associated data science platform. Ten of the most prominent data science and machine learning platforms are listed below, each with a brief description.

- Python (Open Source)

- Description: Python is a popular open-source programming language for data science. It has a vast ecosystem of libraries and frameworks, such as NumPy, Pandas, scikit-learn, TensorFlow, and PyTorch, which provide extensive functionality for data analysis, machine learning, and deep learning.

- Download URL: https://www.python.org/downloads/

- R (Open Source)

- Description: R is another open-source programming language commonly used for statistical analysis and data science. It offers a wide range of packages and libraries for data manipulation, visualization, and modeling. RStudio is a popular integrated development environment (IDE) for R.

- Download URL: https://www.r-project.org/

- TensorFlow (Open Source)

- Description: TensorFlow is an open-source machine learning platform developed by Google. It provides a comprehensive ecosystem for building and deploying machine learning models, including tools for data preprocessing, neural networks, model optimization, and serving.

- Download URL: https://www.tensorflow.org/

- PyTorch (Open Source)

- Description: PyTorch is an open-source machine learning library developed by Facebook's AI Research lab. It is widely used for deep learning and offers dynamic computation graphs, which allow flexible model development. It provides tools for building neural networks, automatic differentiation (autograd), and distributed computing, along with a rich ecosystem of libraries for natural language processing, making it a flexible and efficient platform for training custom LLMs with various neural network architectures.

- Download URL: https://pytorch.org/

- Apache Spark (Open Source)

- Description: Apache Spark is an open-source cluster computing framework that provides distributed data processing and analytics capabilities. It supports various programming languages and offers high-level APIs for batch processing, real-time streaming, machine learning, and graph processing.

- Download URL: https://spark.apache.org/downloads.html

- KNIME Analytics Platform (Open Source/Commercial)

- Description: KNIME is an open-source data analytics platform that allows users to visually create data workflows using a drag-and-drop interface. It provides a wide range of data manipulation, transformation, and modeling capabilities. KNIME offers both open-source and commercial editions.

- Download URL: https://www.knime.com/downloads

- RapidMiner (Commercial)

- Description: RapidMiner is a commercial data science platform that offers a visual workflow designer for building data analytics and machine learning models. It provides a wide range of data preprocessing, modeling, and evaluation tools. RapidMiner supports integration with various data sources and offers collaboration features.

- Download URL: https://rapidminer.com/get-started/

- DataRobot (Commercial)

- Description: DataRobot is an automated machine learning platform that enables users to build and deploy machine learning models without extensive programming knowledge. It automates several steps of the machine learning workflow, including data preprocessing, feature engineering, model selection, and deployment.

- Download URL: Contact DataRobot for more information.

- Microsoft Azure Machine Learning (Commercial)

- Description: Azure Machine Learning is a cloud-based platform provided by Microsoft. It offers a comprehensive set of tools and services for building, deploying, and managing machine learning models. Azure ML supports both code-based and drag-and-drop model development workflows.

- Download URL: https://azure.microsoft.com/en-us/services/machine-learning/

- Google Cloud AI Platform (Commercial)

- Description: Google Cloud AI Platform is a cloud-based data science platform that provides managed services for building, deploying, and scaling machine learning models.
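
The PyTorch entry above mentions dynamic computation graphs and autograd. As a rough illustration of the idea only, and not PyTorch's actual implementation or API, the following pure-Python sketch builds a computation graph while an expression is evaluated and then walks it backwards to obtain gradients. The `Value` class and its methods are hypothetical names invented for this example, and only addition and multiplication are supported.

```python
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # leaf nodes have nothing to propagate

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = backward
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = backward
        return out

    def backward(self):
        """Visit nodes in reverse topological order, applying the chain rule."""
        ordered, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                ordered.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(ordered):
            node._backward()


# y = w * x + b; the graph is built on the fly as the expression is evaluated
w, x, b = Value(2.0), Value(3.0), Value(1.0)
y = w * x + b
y.backward()
print(y.data, w.grad, x.grad, b.grad)
```

Because the graph is recorded during evaluation rather than declared up front, ordinary Python control flow can change its shape from one run to the next, which is the flexibility that "dynamic" refers to.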

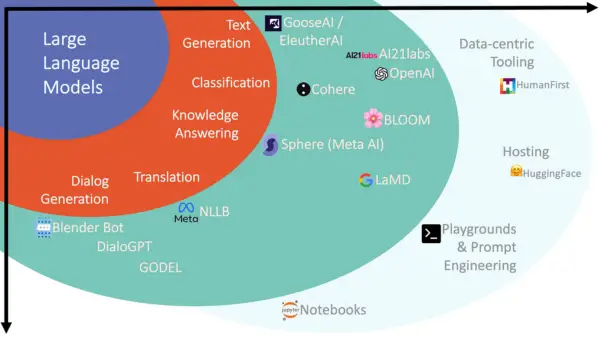

- GooseAI

- GooseAI is a fully managed NLP-as-a-Service delivered via API. More details are available at https://goose.ai/.

- Google LaMDA

- LaMDA’s conversational skills have been years in the making. Like many recent language models, including BERT and GPT-3, it’s built on Transformer, a neural network architecture that Google Research invented and open-sourced in 2017. That architecture produces a model that can be trained to read many words (a sentence or paragraph, for example), pay attention to how those words relate to one another and then predict what words it thinks will come next. But unlike most other language models, LaMDA was trained on dialogue. During its training, it picked up on several of the nuances that distinguish open-ended conversation from other forms of language. One of those nuances is sensibleness.

- No Language Left Behind (NLLB)

- No Language Left Behind (NLLB) is a first-of-its-kind, AI breakthrough project that open-sources models capable of delivering evaluated, high-quality translations directly between 200 languages—including low-resource languages like Asturian, Luganda, Urdu and more. It aims to give people the opportunity to access and share web content in their native language, and communicate with anyone, anywhere, regardless of their language preferences.
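
The LaMDA entry above describes the Transformer as paying attention to how words relate to one another. A minimal sketch of that idea is scaled dot-product attention, shown below in plain Python. It is a simplification: the word vectors are made-up numbers, and the learned projection matrices that real Transformer layers apply to obtain queries, keys, and values are omitted.

```python
import math


def softmax(scores):
    """Turn raw similarity scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension)
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-dimensional vectors standing in for three words (made-up numbers)
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = attention(embeddings, embeddings, embeddings)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how strongly one word attends to every word in the sequence; words with similar vectors receive larger weights.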

AI Model repositories

The natural first step in creating and training a machine learning model is to use pre-trained models and algorithms. The following list covers the most prominent machine learning and AI model repositories.

- Algorithm Registry

- URL: https://algorithmia.com/algorithm-registry

- Review: Algorithm Registry, provided by Algorithmia, is a comprehensive marketplace for AI algorithms and models. It offers a wide range of pre-trained models and algorithms across various domains, including natural language processing, computer vision, and data analytics. The platform provides a seamless integration process and allows users to deploy AI algorithms in their applications with ease.

- Hugging Face Models Hub

- URL: https://huggingface.co/models

- Review: The Hugging Face Models Hub is a popular platform that hosts a vast collection of pre-trained AI models, primarily focused on natural language processing tasks. It offers a wide variety of models that can be directly used or fine-tuned for specific tasks. The platform also provides an interactive API, allowing users to easily integrate the models into their applications.

- Papers with Code

- URL: https://paperswithcode.com/

- Review: Papers with Code is a platform that combines academic research papers with their corresponding code implementations. It provides a comprehensive resource for discovering state-of-the-art AI models and algorithms across various domains. Users can explore papers, access code repositories, and reproduce results to leverage the latest advancements in AI.

- Kaggle

- URL: https://www.kaggle.com/

- Review: Kaggle is a popular online community and platform for data science competitions. It offers a rich collection of datasets, notebooks, and kernels that encompass a wide range of AI applications. Users can access pre-trained models, share their own models, and collaborate with a global community of data scientists and AI enthusiasts.

- ModelDepot

- URL: https://modeldepot.io/

- Review: ModelDepot is an AI model repository that provides a centralized platform for sharing and accessing pre-trained models. It covers various domains such as computer vision, natural language processing, and time series analysis. The platform offers easy-to-use APIs and integrations, enabling users to quickly incorporate AI models into their projects.

- Model Zoo

- URL: https://modelzoo.co/

- Review: Model Zoo is a platform that hosts a collection of state-of-the-art machine learning models and code implementations. It covers a wide range of AI domains, including computer vision, reinforcement learning, and generative modeling. Users can explore and use models shared by the AI community and contribute their own implementations.

- Azure AI Gallery

- URL: https://gallery.azure.ai/

- Review: Azure AI Gallery, provided by Microsoft, is a marketplace that hosts a variety of AI models, solutions, and resources. It offers a range of pre-built models and solution templates that can be deployed on Microsoft Azure. The platform provides a user-friendly interface for discovering and integrating AI capabilities into Azure-based projects.

Building your own AI LLM

Taking this one step further, a company can now create its own LLMs and use its own data for generative AI applications. The following platforms and tools can help companies build their own large language models:

- Hugging Face Transformers

- Description: Hugging Face Transformers is a popular open-source library that provides a comprehensive set of tools and pre-trained models for natural language processing tasks. It allows developers to fine-tune existing models or build their own LLMs using Transformer architectures like BERT or GPT.

- URL: https://huggingface.co/transformers/

- OpenAI GPT-3 and GPT-4

- Description: GPT-3 (Generative Pre-trained Transformer 3) and GPT-4 by OpenAI are among the most advanced LLMs. While OpenAI doesn't provide a platform specifically for building LLMs, GPT-3 and GPT-4 can be used via OpenAI's API to develop applications that leverage their powerful language generation capabilities.

- URL: https://openai.com/

- H2O.ai

- Description: H2O.ai offers the H2O-3 platform, which provides a range of tools for machine learning and data analysis. While it doesn't focus solely on LLMs, it offers capabilities for building language models using its AutoML functionality and various algorithms.

- URL: https://www.h2o.ai/

- Gensim

- Description: Gensim is an open-source Python library for topic modeling, document indexing, and similarity retrieval. While not specifically designed for LLMs, it provides tools for building word embeddings and can be utilized to train language models.

- URL: https://radimrehurek.com/gensim/

- Microsoft Turing

- Description: Microsoft Turing is an LLM developed by Microsoft Research. It is based on Transformer architecture and can be fine-tuned for specific tasks. While not directly accessible for building custom models, Microsoft offers APIs and services that leverage Turing's capabilities.

- URL:

- Google Cloud AutoML Natural Language

- Description: Google Cloud AutoML Natural Language is a platform that allows users to build custom natural language processing models. While it doesn't focus solely on LLMs, it provides capabilities for training models for tasks like sentiment analysis, entity recognition, and content classification.

- URL: https://cloud.google.com/natural-language/automl/docs/

- FastText

- Description: FastText is an open-source library developed by Facebook AI Research. It provides tools for learning word representations and training text classifiers. While it is not an LLM framework, it is efficient and well suited to large-scale text classification and word-embedding tasks.

- URL: https://fasttext.cc/

- SpaCy

- Description: SpaCy is an open-source library for natural language processing in Python. While it primarily focuses on linguistic features, it offers components for training custom pipeline models. SpaCy provides a streamlined and efficient framework for building NLP pipelines that can sit alongside or feed LLM-based applications.

- URL: https://spacy.io/

- Ludwig

- Description: Ludwig is an open-source deep learning toolbox developed by Uber AI. While not specifically designed for LLMs, it provides an easy-to-use interface for training and deploying machine learning models, including natural language processing models.

- URL: https://ludwig-ai.github.io/ludwig-docs/

- Mozaic

- Description: Mozaic is an AI platform that allows companies to build their own large language models. It provides tools for data ingestion, preprocessing, training, and model deployment. Mozaic offers customization options to tailor the LLMs to specific use cases and provides APIs for integration with other applications and services.

- URL:

- Cohere

- Description: Cohere's models power interactive chat features; generate text for product descriptions, blog posts, and articles; and capture the meaning of text for search, content moderation, and intent recognition. Cohere's embedding models let applications work with the meaning of text data at scale, enabling semantic search, classification, and reranking.

- URL: https://cohere.com/

- AI21 Labs

- Description: We are at the start of a revolution in Natural Language Processing (NLP), the ability of machines to understand and generate natural text. Language is a uniquely human ability, so significant progress in NLP calls for considerable scientific and engineering innovation. AI21 Labs aims to lead this revolution.

- URL: https://www.ai21.com/

- HumanFirst

- Description: HumanFirst.AI provides a no-code platform for continuously increasing the coverage and accuracy of conversational AI. It lets teams discover new intents and improve existing ones, integrate with business intelligence data, prepare fine-tuning datasets for LLMs from labeled data, and store, explore, categorize, and supervise the output of generative models. Its NLU and NLG design tooling is intended to make LLM-powered app development observable, testable, and predictable.

- URL: https://www.humanfirst.ai/

- Eleuther.ai

- Description: EleutherAI is a non-profit AI research lab that focuses on the interpretability and alignment of large models. The development of transformer-based language models, especially GPT-3, has supercharged interest in large-scale machine learning research. Because of the high costs and unusual skill set required to advance the field, large-scale AI research today is dominated by a few large technology companies and start-ups. EleutherAI believes that these technologies are both highly promising and potentially dangerous, and that decisions about their future should not be restricted to the employees of a handful of companies that develop them for profit. Research into interpretability and alignment is of utmost importance for AI governance. As increasingly powerful machine learning systems are developed and deployed, it is crucial that independent researchers are able to study them.

- URL: https://www.eleuther.ai/about
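
Several entries above (Gensim, Cohere) mention embeddings, similarity retrieval, and semantic search. The underlying idea can be sketched without any of those libraries: represent each text as a vector and rank documents by cosine similarity to the query vector. The three-dimensional vectors below are made-up numbers for illustration; in practice they would come from an embedding model, and the function names are assumptions of this example rather than any product's API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vector, document_vectors):
    """Return document names sorted from most to least similar to the query."""
    scored = [
        (cosine_similarity(query_vector, vector), name)
        for name, vector in document_vectors.items()
    ]
    return [name for score, name in sorted(scored, reverse=True)]


# Made-up embeddings; a real system would obtain these from a model
documents = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.2, 0.8, 0.1],
    "office locations": [0.0, 0.2, 0.9],
}
# Hypothetical embedding of the query "how do I get my money back?"
query = [0.8, 0.3, 0.0]

ranking = rank_documents(query, documents)
print(ranking)
```

With these toy vectors the refund document ranks first even though the query shares no words with its title, which is the point of searching by meaning rather than by keyword.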

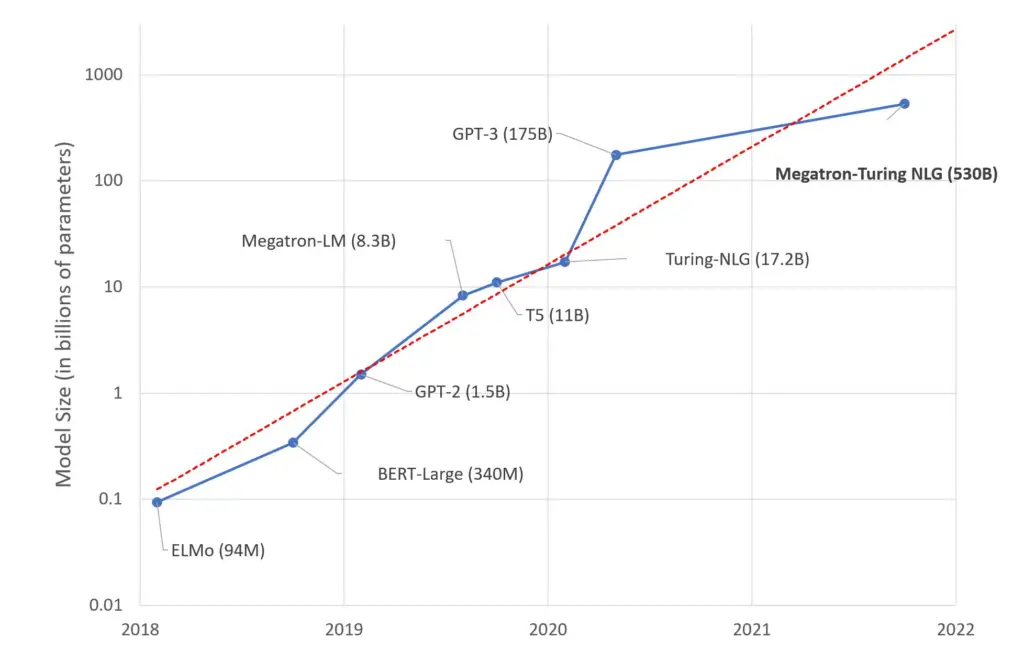

A new Moore's law for AI LLM

A recent article from the Hugging Face ML/AI community presents the technological and hardware developments behind new AI models. It shows that leaders in this area, in this case Microsoft and NVIDIA, are pushing the limits on the number of model parameters. By 2022, the official count had reached 530B (billion) parameters with the Megatron-Turing NLG model, running on DGX A100 multi-GPU servers. At this point that seems like overkill, and difficult for most organizations to implement and use under realistic circumstances. However, as the article notes, researchers estimate that the human brain contains on average 86 billion neurons and 100 trillion synapses, and it is safe to assume that not all of them are dedicated to language. We therefore see AI model parameter counts gradually converging toward the number of neurons and synapses in the human brain.
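
A quick back-of-the-envelope calculation puts these figures side by side. The parameter, neuron, and synapse counts are the ones cited above; the storage size per parameter and the memory per GPU are assumptions chosen for illustration, and the estimate covers only holding the weights in memory, not training or serving overhead.

```python
import math

PARAMETERS = 530e9   # Megatron-Turing NLG, as cited above
NEURONS = 86e9       # human brain estimate cited above
SYNAPSES = 100e12    # human brain estimate cited above

BYTES_PER_PARAMETER = 2  # assumption: weights stored as 16-bit numbers
GPU_MEMORY_GB = 80       # assumption: 80 GB of memory per accelerator

# Memory needed just to hold the weights, in decimal gigabytes
weights_gb = PARAMETERS * BYTES_PER_PARAMETER / 1e9
gpus_for_weights = math.ceil(weights_gb / GPU_MEMORY_GB)

print(f"Weights alone: {weights_gb:,.0f} GB")
print(f"GPUs needed just to hold them: {gpus_for_weights}")
print(f"Parameters per neuron: {PARAMETERS / NEURONS:.1f}")
print(f"Synapses per parameter: {SYNAPSES / PARAMETERS:.0f}")
```

Under these assumptions the weights alone occupy about 1,060 GB, which illustrates why such a model is hard to deploy widely. The model already has roughly six parameters per brain neuron, but the brain still has on the order of 190 synapses for every model parameter.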